OK, rant time.

Way back in the day (I mean 2001 or so), I used RPM-based distros: Red Hat and Mandriva (or rather Mandrake, as it was called then), and they worked fine. As long as you didn’t have to install any packages. To be fair, this was in the early days of package managers and the like, and I was a novice Linux user at the time. Mandrake had put in a good effort with urpmi, but I still had to visit sites like http://rpm.pbone.net/ and http://rpmfind.net/ very often to track down this or that package.

Then, in 2004/2005, I discovered Ubuntu. (The OS, not the philosophy. Ha ha.) It made a world of difference. Need a program? apt-get install program would automagically fetch and install it for you. Don’t know the name of the package, or exactly what you’re looking for? apt-cache search can help. What if the package you want has dependencies, and those have dependencies too? No problem: everything gets pulled in, and the proposed changes are listed for you. Another advantage was that seemingly any program I could possibly want was available in a Debian/Ubuntu repo.

Fast forward to today. I’ve pretty much been using Debian-based distros since then, although I have tried Arch and Slax, and possibly many others that I can’t remember right now. All my servers run either Debian or Ubuntu Server, and my Linux PCs run Ubuntu or Arch. Package management has become so easy that I rarely have to worry about it, unless I’m making major changes outside of repo packages.

Recently, however, I’ve started using some RPM-based distros again, to see how things are on that side of the fence. It’s been mostly CentOS and a few CentOS-based PBX distros (Elastix, Trixbox, pbxinaflash…). I have to say, I can’t believe the state of their package management. CentOS has yum, which seems good in principle, but I’ve seen it fail massively in ways that apt never has for me. The first issue isn’t really the package manager’s fault, though; it’s more about the repositories.

For example, we had a service on a server at work that absolutely required Arial. On Debian or Ubuntu, all you have to do is enable the contrib repo (multiverse on Ubuntu); on Arch, use one of the excellent AUR frontends such as yaourt. Then install msttcorefonts (Debian) or ttf-ms-fonts (Arch). The package manager fetches the MS fonts package and its dependency, cabextract. The package then downloads each font’s self-extracting EXE from SourceForge, cabextracts it, and installs the fonts to the appropriate fonts directory. On the CentOS 5 server, however, no such luck.

```
$ yum install msttcorefonts
Loaded plugins: downloadonly, fastestmirror
Loading mirror speeds from cached hostfile
* base: centos.mirror.nexicom.net
* extras: centos.mirror.nexicom.net
* updates: centos.mirror.nexicom.net
Excluding Packages from CentOS-5 - Addons
Finished
Excluding Packages from CentOS-5 - Base
Finished
Excluding Packages from CentOS-5 - Extras
Finished
Excluding Packages from CentOS-5 - Updates
Finished
Setting up Install Process
No package msttcorefonts available.
Nothing to do
$
```

Awesome. Time to break out the manual package manager, AKA Google, which brings me to the corefonts SourceForge project homepage. Fortunately, it has clear instructions on how to install on an RPM-based system:

- Make sure you have the following rpm-packages installed from your favourite distribution. Any version should do.
  - rpm-build
  - wget
  - A package that provides the ttmkfdir utility. For example:
    - For Fedora Core and Red Hat Enterprise Linux 4, ttmkfdir
    - For old Red Hat releases, XFree86-font-utils
    - For mandrake-8.2, freetype-tools

- Install the cabextract utility. For users of Fedora Core it is available from Extras. Others may want to compile it themselves from source, or download the source RPM from Fedora Extras and rebuild.

- Download the latest msttcorefonts spec file from here.

- If you haven’t done so already, set up an RPM build environment in your home directory. You can do this by adding the line `%_topdir %(echo $HOME)/rpmbuild` to your `$HOME/.rpmmacros` and creating the directories `$HOME/rpmbuild/BUILD` and `$HOME/rpmbuild/RPMS/noarch`.

- Build the binary RPM with this command:

  ```
  $ rpmbuild -bb msttcorefonts-2.0-1.spec
  ```

  This will download the fonts from a SourceForge mirror (about 8 megs) and repackage them so that they can be easily installed.

- Install the newly built RPM using the following command (you will need to be root):

  ```
  # rpm -ivh $HOME/rpmbuild/RPMS/noarch/msttcorefonts-2.0-1.noarch.rpm
  ```
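The build-environment step is the fiddliest part to do by hand, so here it is as a small shell function. This is a minimal sketch, assuming a POSIX shell and a writable `$HOME`; the name `setup_rpmbuild_tree` is hypothetical, it only creates the two directories the instructions name, and it leaves an existing `%_topdir` line alone rather than overwriting it.

```shell
#!/bin/sh
# Minimal sketch of the corefonts "rpm build environment" step.
# setup_rpmbuild_tree is a hypothetical helper name.
setup_rpmbuild_tree() {
    macros="$HOME/.rpmmacros"
    # Add %_topdir only if the file doesn't already define one, so an
    # existing setting is never overwritten or duplicated.
    # Single quotes keep $HOME literal: rpm expands %(echo $HOME) itself.
    if ! grep -q '^%_topdir' "$macros" 2>/dev/null; then
        echo '%_topdir %(echo $HOME)/rpmbuild' >>"$macros"
    fi
    # The two directories the instructions ask for.
    mkdir -p "$HOME/rpmbuild/BUILD" "$HOME/rpmbuild/RPMS/noarch"
}

setup_rpmbuild_tree
```

Because of the `grep` guard, running it twice is harmless: the macro line is only ever written once.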

Sounds like fun. Let’s try and see if we’re lucky.

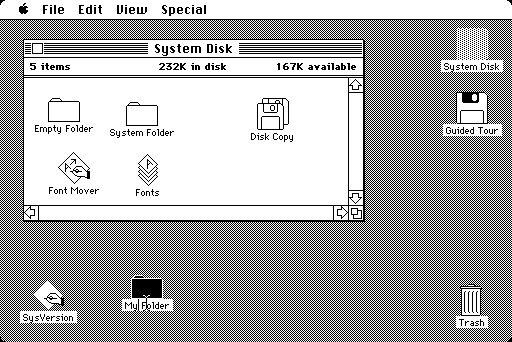

```
yum install wget rpm-build cabextract
```

![[screen 0_ sh] — ssh — 152×51](https://matthieu.yiptong.ca/wp-content/uploads/2011/12/screen-0_-sh-—-ssh-—-152×51-300x228.jpg)

Cool, rpm-build was installed! But wait, what about wget and cabextract? It didn’t mention those!

wget is probably installed, but let’s try anyway:

```
$ wget
wget: missing URL
Usage: wget [OPTION]... [URL]...
Try `wget --help' for more options.
```

OK, how about cabextract?

```
$ cabextract
sh: cabextract: command not found
```
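Rather than poking at each command by hand, a quick loop can report which of the build prerequisites are actually on the PATH. A sketch, assuming a POSIX shell; `check_tools` is a hypothetical helper name, and the tool list is taken from the corefonts instructions above. (On the package side, `rpm -q cabextract` also reports whether the package is installed.)

```shell
#!/bin/sh
# Report which commands are on the PATH; return non-zero if any are missing.
# check_tools is a hypothetical helper name.
check_tools() {
    status=0
    for tool in "$@"; do
        if command -v "$tool" >/dev/null 2>&1; then
            echo "found:   $tool"
        else
            echo "missing: $tool"
            status=1
        fi
    done
    return "$status"
}

# Tools named in the corefonts instructions (ttmkfdir is only one of the
# possible providers of that utility).
check_tools rpmbuild wget cabextract ttmkfdir || echo "Install the missing tools first."
```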

Well then, that’s wonderful. Thanks for mentioning that you didn’t install cabextract, yum.

Fortunately, the good people at corefonts provide a link to a cabextract download, and my server happens to be i386 (I know it doesn’t look like it from the screenshot), so I can use the pre-built RPM. (For those who need it, there is also an x86_64 package.) Now for the final step.
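Since the pre-built cabextract RPM comes in both i386 and x86_64 builds, it’s worth checking what the machine actually is rather than going by a screenshot. A small sketch, assuming `uname -m` reports something like i686 on 32-bit x86 (which takes the i386 RPM) and x86_64 on 64-bit; `rpm_arch` is a hypothetical helper name and only handles those two builds.

```shell
#!/bin/sh
# Map a machine name (default: `uname -m`) to the RPM arch of a pre-built
# package. rpm_arch is a hypothetical helper; only the i386 and x86_64
# builds are handled.
rpm_arch() {
    machine=${1:-$(uname -m)}
    case "$machine" in
        i?86)   echo i386 ;;      # i386, i486, i586, i686 -> i386 RPM
        x86_64) echo x86_64 ;;
        *)      echo "no pre-built package for $machine" >&2
                return 1 ;;
    esac
}

arch=$(rpm_arch) && echo "Use the $arch cabextract RPM"
```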

```
$ wget http://corefonts.sourceforge.net/msttcorefonts-2.0-1.spec && rpmbuild -bb msttcorefonts-2.0-1.spec
Executing(%prep): /bin/sh -e /var/tmp/rpm-tmp.77304
+ umask 022
+ cd /usr/src/redhat/BUILD
[… a hundred or so lines…]
Wrote: /usr/src/redhat/RPMS/noarch/msttcorefonts-2.0-1.noarch.rpm
Executing(%clean): /bin/sh -e /var/tmp/rpm-tmp.22861
+ umask 022
+ cd /usr/src/redhat/BUILD
+ '[' /var/tmp/msttcorefonts-root '!=' / ']'
+ rm -rf /var/tmp/msttcorefonts-root
+ exit 0
```

Phew, that’s a lot of output. Well, exit 0, that’s good. Aaand “Wrote: /usr/src/redhat/RPMS/noarch/msttcorefonts-2.0-1.noarch.rpm”. Cool!
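Fishing the package path out of all that output is easy to automate, since rpmbuild reports each package it writes on a “Wrote:” line. A sketch under that assumption: `built_rpms` and `build_and_report` are hypothetical names, and the log goes to a temporary file so rpmbuild’s own exit status is returned instead of being lost in a pipe.

```shell
#!/bin/sh
# Print the package paths rpmbuild reports on its "Wrote:" lines.
# Reads build output on stdin. built_rpms is a hypothetical helper name.
built_rpms() {
    sed -n 's/^Wrote: //p'
}

# Hypothetical wrapper: build a spec, print the resulting RPM path(s), and
# return rpmbuild's own exit status. The log goes to a temporary file so
# that status isn't swallowed by a pipe.
build_and_report() {
    log=$(mktemp) || return 1
    rpmbuild -bb "$1" >"$log" 2>&1
    status=$?
    built_rpms <"$log"
    rm -f "$log"
    return "$status"
}
```

Usage would be along the lines of `build_and_report msttcorefonts-2.0-1.spec`, with the printed path then handed to `rpm -ivh`.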

And finally:

```
$ rpm -ivh /usr/src/redhat/RPMS/noarch/msttcorefonts-2.0-1.noarch.rpm
Preparing... ########################################### [100%]
1:msttcorefonts ########################################### [100%]
$
```

(Another thing that bugs me: no success message! After all that, not even a “Yay! Package installed!”? I’m disappointed, rpm.)
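Since rpm stays silent on success, you have to go and check for yourself. `rpm -q msttcorefonts` prints the installed package’s full name if it is there, and to confirm that fontconfig actually sees the font, the output of `fc-list : family` can be searched. A sketch, assuming `fc-list` prints one comma-separated list of family names per line; `has_font_family` is a hypothetical helper name.

```shell
#!/bin/sh
# Succeed if a font family name appears in `fc-list : family` output on stdin.
# Each input line is a comma-separated list of family names; comparison is
# case-insensitive. has_font_family is a hypothetical helper name.
has_font_family() {
    awk -F, -v want="$1" '
        {
            for (i = 1; i <= NF; i++) {
                name = $i
                gsub(/^[ \t]+|[ \t]+$/, "", name)   # trim stray spaces
                if (tolower(name) == tolower(want)) found = 1
            }
        }
        END { exit (found ? 0 : 1) }'
}

if command -v fc-list >/dev/null 2>&1; then
    fc-list : family | has_font_family Arial && echo "fontconfig can see Arial"
fi
```

Matching whole names rather than substrings means “Arial Black” on its own does not count as Arial.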

For illustrative purposes, Debian:

```
# apt-get install msttcorefonts
Reading package lists... Done
Building dependency tree
Reading state information... Done
The following extra packages will be installed:
cabextract ttf-liberation ttf-mscorefonts-installer
The following NEW packages will be installed:
cabextract msttcorefonts ttf-liberation ttf-mscorefonts-installer
0 upgraded, 4 newly installed, 0 to remove and 4 not upgraded.
Need to get 1103kB of archives.
After this operation, 2109kB of additional disk space will be used.
Do you want to continue [Y/n]? Y
[…]
All fonts downloaded and installed.
Updating fontconfig cache for /usr/share/fonts/truetype/msttcorefonts
Setting up msttcorefonts (2.7) ...
Setting up ttf-liberation (1.04.93-1) ...
Updating fontconfig cache for /usr/share/fonts/truetype/ttf-liberation
```

Wasn’t that easier? Also, a nice plain English message saying what was done: “All fonts downloaded and installed.” Take notes, rpm.

For completeness’ sake, Arch:

```
$ yaourt -S ttf-ms-fonts
==> Downloading ttf-ms-fonts PKGBUILD from AUR...
x PKGBUILD
x ttf-ms-fonts.install
x LICENSE
[…]
==> ttf-ms-fonts dependencies:
- fontconfig (already installed)
- xorg-fonts-encodings (already installed)
- xorg-font-utils (already installed)
- cabextract (package found)
[…]
Targets (1): ttf-ms-fonts-2.0-8
Total Download Size: 0.00 MB
Total Installed Size: 5.49 MB
Proceed with installation? [Y/n]
(1/1) checking package integrity [########################################] 100%
(1/1) checking for file conflicts [########################################] 100%
(1/1) installing ttf-ms-fonts [########################################] 100%
Updating font cache... done.
$
```

A bit more user interaction, but that’s the point of Arch.

So, to summarize:

Arch/Debian package management > rpm package management (CentOS).

And that’s the end of my rant for today.

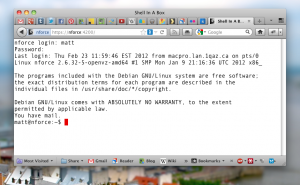

![[screen 0_ sh] — ssh — 152×51](https://matthieu.yiptong.ca/wp-content/uploads/2011/12/screen-0_-sh-—-ssh-—-152×51-300x228.jpg)