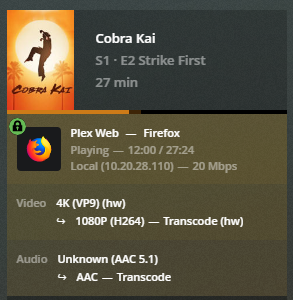

NVIDIA GPU ‘passthrough’ to lxc containers on Proxmox 6 for NVENC in Plex

I’ve found multiple guides on how to enable NVIDIA GPU access from lxc containers; however, I had to combine the information from several sources to get a fully working setup. Here are the steps that worked for me.

1. Install dkms on your Proxmox host so the nvidia kernel module can be rebuilt automatically when a new kernel is installed.

   ```
   # apt install dkms
   ```

2. Head over to https://github.com/keylase/nvidia-patch and get the latest Nvidia binary driver version listed there as supported.
3. Download the nvidia-patch repo:

   ```
   git clone https://github.com/keylase/nvidia-patch.git
   ```

4. Install the driver from step 2 on the host, for example:

   ```
   ./NVIDIA-Linux-x86_64-455.45.01.run
   ```

5. Run the `nvidia-patch/patch.sh` script on the host.
6. Install the same driver version in each container that needs access to the Nvidia GPU, but without the kernel module, since containers share the host's kernel and therefore its module:

   ```
   ./NVIDIA-Linux-x86_64-455.45.01.run --no-kernel-module
   ```

7. Run the `nvidia-patch/patch.sh` script in the lxc container.
8. On the host, create a script to initialize the nvidia-uvm devices. Normally these are created on the fly when a program such as ffmpeg calls upon the GPU, but since we need to pass the device nodes through to the containers, they must exist before the containers are started.

I saved the following script as `/usr/local/bin/nvidia-uvm-init`. Make sure to `chmod +x` it!

```bash
#!/bin/bash
## Script to initialize nvidia device nodes.
## https://docs.nvidia.com/cuda/cuda-installation-guide-linux/index.html#runfile-verifications
## Nodes that already exist are skipped, so the script can safely be re-run.

if /sbin/modprobe nvidia; then
    # Count the number of NVIDIA controllers found.
    NVDEVS=$(lspci | grep -i NVIDIA)
    N3D=$(echo "$NVDEVS" | grep -c "3D controller")
    NVGA=$(echo "$NVDEVS" | grep -c "VGA compatible controller")
    N=$((N3D + NVGA - 1))

    # One /dev/nvidiaN node per GPU, all on the fixed major 195.
    for i in $(seq 0 "$N"); do
        [ -e "/dev/nvidia$i" ] || mknod -m 666 "/dev/nvidia$i" c 195 "$i"
    done

    [ -e /dev/nvidiactl ] || mknod -m 666 /dev/nvidiactl c 195 255
else
    exit 1
fi

if /sbin/modprobe nvidia-uvm; then
    # Find out the major device number used by the nvidia-uvm driver.
    D=$(grep nvidia-uvm /proc/devices | awk '{print $1}')

    # nvidia-uvm uses minor 0 and nvidia-uvm-tools minor 1 of that major.
    [ -e /dev/nvidia-uvm ] || mknod -m 666 /dev/nvidia-uvm c "$D" 0
    [ -e /dev/nvidia-uvm-tools ] || mknod -m 666 /dev/nvidia-uvm-tools c "$D" 1
else
    exit 1
fi
```
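The script uses the fixed major 195 for /dev/nvidiaN and /dev/nvidiactl, but looks up nvidia-uvm in /proc/devices because that module's major number is assigned dynamically when it loads; this is why it shows up as 511 on this host and may well be different on yours. Below is a minimal sketch of that lookup as a reusable function. The name `char_major` and its optional file argument are hypothetical, and it assumes the usual /proc/devices layout of a "Character devices:" section followed by "Block devices:".

```shell
# char_major NAME [FILE]  (hypothetical helper, not part of nvidia-uvm-init)
# Print the character-device major number registered for the driver NAME.
# FILE defaults to /proc/devices; pass a copy of it to try the function elsewhere.
char_major() {
    awk -v name="$1" '
        /^Character devices:/ { in_char = 1; next }  # start of the char section
        /^Block devices:/     { in_char = 0 }        # block devices are ignored
        in_char && $2 == name { print $1; found = 1; exit }
        END { if (!found) exit 1 }                   # non-zero status if NAME is absent
    ' "${2:-/proc/devices}"
}
```

On the host, `char_major nvidia-uvm` should print the same number the script stores in `D`. Matching the exact driver name in the second column, rather than a substring with grep, avoids picking up any other entry whose name merely contains nvidia-uvm.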

Next, create the following two systemd service files, one to run the nvidia-uvm-init script and one to start nvidia-persistenced:

/usr/local/lib/systemd/system/nvidia-uvm-init.service

```
# nvidia-uvm-init.service
# loads nvidia-uvm module and creates /dev/nvidia-uvm device nodes

[Unit]
Description=Runs /usr/local/bin/nvidia-uvm-init

[Service]
ExecStart=/usr/local/bin/nvidia-uvm-init

[Install]
WantedBy=multi-user.target
```

/usr/local/lib/systemd/system/nvidia-persistenced.service

```
# NVIDIA Persistence Daemon Init Script
#
# Copyright (c) 2013 NVIDIA Corporation
#
# Permission is hereby granted, free of charge, to any person obtaining a
# copy of this software and associated documentation files (the "Software"),
# to deal in the Software without restriction, including without limitation
# the rights to use, copy, modify, merge, publish, distribute, sublicense,
# and/or sell copies of the Software, and to permit persons to whom the
# Software is furnished to do so, subject to the following conditions:
#
# The above copyright notice and this permission notice shall be included in
# all copies or substantial portions of the Software.
#
# THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
# IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
# FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
# AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
# LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING
# FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER
# DEALINGS IN THE SOFTWARE.
#
# This is a sample systemd service file, designed to show how the NVIDIA
# Persistence Daemon can be started.
#

[Unit]
Description=NVIDIA Persistence Daemon
Wants=syslog.target

[Service]
Type=forking
ExecStart=/usr/bin/nvidia-persistenced --user nvidia-persistenced
ExecStopPost=/bin/rm -rf /var/run/nvidia-persistenced

[Install]
WantedBy=multi-user.target
```

Next, symlink the two service definition files into `/etc/systemd/system`:

```
# cd /etc/systemd/system
# ln -s /usr/local/lib/systemd/system/nvidia-uvm-init.service
# ln -s /usr/local/lib/systemd/system/nvidia-persistenced.service
```

and load and start the services, then enable them so they also run at every boot:

```
# systemctl daemon-reload
# systemctl start nvidia-uvm-init.service
# systemctl start nvidia-persistenced.service
# systemctl enable nvidia-uvm-init.service nvidia-persistenced.service
```

Now you should see that all the nvidia device nodes have been created:

```
# ls -l /dev/nvidia*
crw-rw-rw- 1 root root 195, 0 Dec 6 18:07 /dev/nvidia0
crw-rw-rw- 1 root root 195, 1 Dec 6 18:10 /dev/nvidia1
crw-rw-rw- 1 root root 195, 255 Dec 6 18:07 /dev/nvidiactl
crw-rw-rw- 1 root root 195, 254 Dec 6 18:12 /dev/nvidia-modeset
crw-rw-rw- 1 root root 511, 0 Dec 6 19:00 /dev/nvidia-uvm
crw-rw-rw- 1 root root 511, 0 Dec 6 19:00 /dev/nvidia-uvm-tools

/dev/nvidia-caps:
total 0
cr-------- 1 root root 236, 1 Dec 6 18:07 nvidia-cap1
cr--r--r-- 1 root root 236, 2 Dec 6 18:07 nvidia-cap2
```
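Rather than comparing listings by eye, a small check can confirm that each expected node exists and really is a character device. This is a sketch: `check_char_devices` is a hypothetical name, and the node list you pass it should match the GPUs on your own host.

```shell
# check_char_devices PATH...  (hypothetical helper)
# Print "ok: PATH" for every character device and "missing: PATH" for anything
# else (absent, a regular file, a directory). Exits non-zero if any are missing.
check_char_devices() (
    status=0
    for node in "$@"; do
        if [ -c "$node" ]; then
            echo "ok: $node"
        else
            echo "missing: $node"
            status=1
        fi
    done
    exit "$status"
)
```

For the host above that would be `check_char_devices /dev/nvidia0 /dev/nvidia1 /dev/nvidiactl /dev/nvidia-modeset /dev/nvidia-uvm /dev/nvidia-uvm-tools`, which should print only `ok:` lines and exit with status 0.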

Check the dri devices as well:

```
# ls -l /dev/dri*
total 0
drwxr-xr-x 2 root root 100 Dec 6 17:00 by-path
crw-rw---- 1 root video 226, 0 Dec 6 17:00 card0
crw-rw---- 1 root video 226, 1 Dec 6 17:00 card1
crw-rw---- 1 root render 226, 128 Dec 6 17:00 renderD128
```

Take note of the major number of each device, which is the first number after the group name. In the listings above, these are 195, 511, 236 and 226.
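The major numbers can also be read directly from the nodes instead of from `ls -l`. GNU `stat`'s `%t` format prints a device's major number in hexadecimal, so a sketch that turns a list of host nodes into de-duplicated `lxc.cgroup.devices.allow` lines could look like this. The function names `major_of` and `allow_lines` are hypothetical, and the sketch assumes GNU coreutils `stat` as shipped with Debian-based Proxmox.

```shell
# major_of NODE  (hypothetical helper): print the decimal major number of a device node.
# GNU stat's %t format prints the major number in hex, so printf converts it.
major_of() {
    printf '%d\n' "0x$(stat -c '%t' "$1")"
}

# allow_lines NODE...  (hypothetical helper): print one lxc.cgroup.devices.allow
# line per distinct character-device major, in the "c MAJOR:* rwm" form.
# Paths that are not character devices (directories, missing nodes) are skipped.
allow_lines() {
    for node in "$@"; do
        [ -c "$node" ] || continue
        echo "lxc.cgroup.devices.allow: c $(major_of "$node"):* rwm"
    done | sort -u
}
```

Run on the host as `allow_lines /dev/nvidia* /dev/nvidia-caps/* /dev/dri/*`, it should print one line for each of the majors noted above (195, 226, 236 and 511 here); directories such as /dev/dri/by-path are skipped.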

Now edit the lxc container configuration file to pass the devices through. Shut down your container, then edit its config file, for example `/etc/pve/lxc/117.conf`. The relevant lines are the ones below the `swap: 8192` line:

```
arch: amd64
cores: 12
features: mount=cifs
hostname: plex
memory: 8192
net0: name=eth0,bridge=vmbr0,firewall=1,gw=192.168.1.1,hwaddr=4A:50:52:00:00:00,ip=192.168.1.122/24,type=veth
onboot: 1
ostype: debian
rootfs: local-lvm:vm-117-disk-0,size=250G,acl=1
startup: order=99
swap: 8192
lxc.cgroup.devices.allow: c 195:* rwm
lxc.cgroup.devices.allow: c 226:* rwm
lxc.cgroup.devices.allow: c 236:* rwm
lxc.cgroup.devices.allow: c 511:* rwm
lxc.mount.entry: /dev/dri dev/dri none bind,optional,create=dir
lxc.mount.entry: /dev/nvidia-caps dev/nvidia-caps none bind,optional,create=dir
lxc.mount.entry: /dev/nvidia0 dev/nvidia0 none bind,optional,create=file
lxc.mount.entry: /dev/nvidiactl dev/nvidiactl none bind,optional,create=file
lxc.mount.entry: /dev/nvidia-uvm dev/nvidia-uvm none bind,optional,create=file
lxc.mount.entry: /dev/nvidia-modeset dev/nvidia-modeset none bind,optional,create=file
lxc.mount.entry: /dev/nvidia-uvm-tools dev/nvidia-uvm-tools none bind,optional,create=file
```
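Every `lxc.mount.entry` line above follows the same pattern: the host path, the same path relative to the container's root (no leading slash), the filesystem type `none`, and `bind,optional` plus `create=dir` for directories or `create=file` for single device nodes. Here is a sketch that generates these lines from a list of host paths; `mount_entries` is a hypothetical helper, and its output still has to be added to the container's .conf file by hand while the container is stopped.

```shell
# mount_entries PATH...  (hypothetical helper): print an lxc.mount.entry line
# for each host path. Directories (such as /dev/dri) get create=dir, everything
# else create=file. The mount target is relative to the container's rootfs,
# so the leading "/" is stripped from the second field.
mount_entries() (
    for path in "$@"; do
        if [ -d "$path" ]; then
            kind=dir
        else
            kind=file
        fi
        echo "lxc.mount.entry: $path ${path#/} none bind,optional,create=$kind"
    done
)
```

On this host, `mount_entries /dev/dri /dev/nvidia-caps /dev/nvidia0 /dev/nvidiactl /dev/nvidia-uvm /dev/nvidia-modeset /dev/nvidia-uvm-tools` should reproduce the seven mount lines in the config above.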

Now start your container back up. You should be able to use NVENC features, which you can test with ffmpeg:

```
$ ffmpeg -i dQw4w9WgXcQ.mp4 -c:v h264_nvenc -c:a copy /tmp/rickroll.mp4
```

You should now have working GPU transcode in your lxc container!
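The test above only proves something if the container's ffmpeg was built with NVENC support in the first place. One way to check is to look for `h264_nvenc` in the output of `ffmpeg -hide_banner -encoders`, where the encoder name is the second column. Below is a sketch of that check as a small filter; `has_encoder` is a hypothetical name.

```shell
# has_encoder NAME  (hypothetical helper): read an "ffmpeg -encoders" listing
# on stdin and exit 0 if an encoder called NAME appears in the second column.
has_encoder() {
    awk -v e="$1" '$2 == e { found = 1 } END { exit !found }'
}
```

Usage inside the container: `ffmpeg -hide_banner -encoders | has_encoder h264_nvenc && echo "NVENC encoder available"`.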

If you get the following error, double-check the numeric values in your lxc.cgroup.devices.allow lines and restart your container.

```
[h264_nvenc @ 0x559f2a536b40] Cannot init CUDA
Error initializing output stream 0:0 -- Error while opening encoder for output stream #0:0 - maybe incorrect parameters such as bit_rate, rate, width or height
Conversion failed!
```

Another sign of trouble is blank (`----------`) permission lines for the nvidia device nodes inside the container. You will get these in any container that was started before the nvidia-uvm-init script initialized the nvidia devices on the host.

```
$ ls -l /dev/nvidia*
---------- 1 root root 0 Dec 6 18:04 /dev/nvidia0
crw-rw-rw- 1 root root 195, 255 Dec 6 19:02 /dev/nvidiactl
---------- 1 root root 0 Dec 6 18:04 /dev/nvidia-modeset
crw-rw-rw- 1 root root 511, 0 Dec 6 19:02 /dev/nvidia-uvm
crw-rw-rw- 1 root root 511, 1 Dec 6 19:02 /dev/nvidia-uvm-tools
```
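The zero-size `----------` entries appear to be the empty files that lxc creates as mount targets for `create=file`; when the host node did not exist yet, the `optional` flag lets the container start without the bind mount, and the placeholder file is all that is left. Here is a sketch of a check to run inside the container that flags such entries; `find_placeholders` is a hypothetical name.

```shell
# find_placeholders PATH...  (hypothetical helper, run inside the container)
# Print every path that exists but is neither a character device nor a
# directory, e.g. an empty file left where a device bind mount was expected.
# Exits non-zero if at least one placeholder is found.
find_placeholders() (
    found=0
    for node in "$@"; do
        if [ -e "$node" ] && [ ! -c "$node" ] && [ ! -d "$node" ]; then
            echo "placeholder: $node"
            found=1
        fi
    done
    [ "$found" -eq 0 ]
)
```

If `find_placeholders /dev/nvidia*` prints anything inside the container, restart the container once the nodes exist on the host.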

Sometimes, after the host has been up for a long time, /dev/nvidia-uvm or other device nodes may disappear. In this case, simply run the nvidia-uvm-init script again, or schedule it to run as a cron job.
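For the cron approach, one option is to re-run the init script only when a node has actually gone missing. The sketch below assumes the script path used earlier; `ensure_nodes` is a hypothetical helper, and which nodes you check is up to you.

```shell
# ensure_nodes INIT NODE...  (hypothetical helper)
# If any NODE is not a character device, run INIT (for example
# /usr/local/bin/nvidia-uvm-init) once and return its exit status.
# Returns 0 without running INIT when every node is present.
ensure_nodes() (
    init=$1
    shift
    for node in "$@"; do
        if [ ! -c "$node" ]; then
            echo "$node is missing, running $init" >&2
            "$init"
            exit
        fi
    done
)
```

Saved in a small wrapper script together with a call such as `ensure_nodes /usr/local/bin/nvidia-uvm-init /dev/nvidia0 /dev/nvidiactl /dev/nvidia-uvm /dev/nvidia-uvm-tools`, it could be scheduled from root's crontab with an entry like `*/15 * * * * /path/to/wrapper`; both the schedule and the wrapper path are placeholders.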